Resource Pricing and TFM Design in Ethereum (Part 1 - Blockchain Resources)

Fantastic resources and how they distribute them (in Ethereum)

Setting the stage

Over the last decade, Ethereum has emerged as one of the first working examples of a decentralised economic system capable of supporting varying degrees of complex interactions between users in a trustless and permissionless manner.

Given the nature of the decentralised domain, building such an economic system has come with many unique challenges that are generally not present in the centrally-planned and mixed economies we are more familiar with today. Because of this unique setting, many of the proposed solutions and mechanism design approaches adopted by Ethereum draw on aspects of both neoclassical and complexity economics.

This demonstrates the sort of “uncharted waters” we are dealing with here. One goal of this article series is therefore to describe Ethereum’s economic system and highlight some of the key features that characterise it and enable it to work in a decentralised setting. While we will not cover the economic system in its entirety, a higher-level overview of what it entails is given later in this introductory section. Primarily, we will focus on understanding the resources that drive Ethereum, how they are translated into demanded goods/services (and what these goods/services are), and the systems responsible for their allocation and distribution.

Defining an economic system

Before we get into it, let’s go over a basic definition of an economic system:

An economic system is a system of production, resource allocation and distribution of goods and services within a society.

It includes the combination of various actors, decision-making processes, and patterns of consumption that comprise the economic structure of a given community.

With this context, there are a few details we can identify that characterise an economic system:

System of production: How many resources are made available in the system for the production of goods/services, and the methods through which these resources are processed into those goods/services.

Resource allocation: How the available resources are allocated to the production of goods/services.

Patterns of consumption: The patterns of resource consumption that influence decisions about which goods/services to produce for users.

Distribution of goods and services: How the exchange of goods/services between producers and users (consumers) is managed.

Various actors: Who the actors involved are and what roles they play in the system.

Decision-making processes: What guides the design of the systems and/or rules that drive the system of production, the resource allocation policy, and the method of distributing goods/services.

While the above is by no means a comprehensive blueprint for an economic system, it gives us a starting point to reason about Ethereum and its economic characteristics.

What is this article series about?

The goal of this article series is to describe the components of Ethereum that together form the most crucial parts of its economic system and drive its activity. In doing so, we will also get a clear picture of the system architecture behind it.

To achieve this goal, we have two tasks ahead of us:

Understand what Ethereum resources are and what goods/services are produced from them.

Understand how produced goods/services are distributed to users.

These tasks will be approached in three interrelated parts, each covered in its own article (starting with Part 1 in this read):

Part 1: Blockchain Resources: This article identifies the underlying resources of Ethereum and takes a deep dive into their characteristics. Understanding these characteristics well matters because these resources are constantly being utilised, reshaped into products, and consumed, and this is what produces economic activity.

Part 2: Resource Pricing: This article describes the mechanism by which Ethereum assigns resource costs associated with the production of goods/services. This will give insight into how different patterns of resource usage are priced, what guides the pricing policies implemented, and what goals the mechanism serves.

Part 3: Transaction Fee Mechanism: This article describes the mechanism through which goods/services produced from blockchain resources are distributed and paid for. You can think of it as focusing on the delivery of the produced goods/services to consumers. Its design is driven by the goal of realising the highest possible economic efficiency within the available constraints (particularly those relevant to the decentralised domain).

While these topics may seem highly specific, you may find that they answer many different questions about Ethereum and how it actually works beyond the basic picture of “it’s a blockchain”.

Ethereum as an economic system

At the beginning of this article we briefly defined what an economic system is and listed some of its characteristics. The motivation behind this was to provide a basic blueprint for a simple economic system so that, in approaching Ethereum, we can recognise where these characteristics emerge in its architecture.

As mentioned previously, there are three parts that make up this article series (blockchain resources, resource pricing, transaction fee mechanism), each having important roles in the economic system model of Ethereum. The diagram below illustrates where we will encounter the economic system characteristics identified earlier:

Basic modelling of Ethereum’s economic system

To start off with this, it is worth distinguishing resources from mechanisms. In outlining the structure of this article series, you can observe that Part 2 (Resource Pricing) and Part 3 (Transaction Fee Mechanism) explore mechanisms within Ethereum’s economic framework while Part 1 (Blockchain Resources) describes the foundational resources that interact with both these mechanisms, albeit in different ways.

The diagram above helps illustrate how these two mechanisms together determine how the foundational resources in Ethereum are put to use. Let’s now describe each mechanism to get an idea of the role it plays in allocating resources to users.

Resource Pricing: Determines resource costs of transactions

This mechanism is responsible both for assigning costs to patterns of resource use and for metering the total resource consumption of transactions. Because transactions can be broken down into chains of lower-level operations (each with its own resource cost), a system for determining the cumulative amount of resources consumed by a transaction is needed. The individual resource costs of the lower-level operations a transaction can use are dictated by a specification known as the resource pricing policy.

A key design goal of the resource pricing mechanism is to price transactions as close as possible to their true social costs, capturing as many external costs as possible within the total transaction cost. A crucial requirement for achieving this goal is that the resource pricing policy sets appropriate relative prices for the various lower-level operations used by transactions, since these operations impose different social costs on Ethereum.

This transaction cost is expressed as a scalar quantity of resources.
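To make the metering idea concrete, here is a minimal Python sketch that sums per-operation costs taken from a pricing policy into one scalar. The operation names and their costs in `PRICING_POLICY` are hypothetical and purely illustrative; they are not Ethereum’s actual pricing policy, which Part 2 covers.

```python
# Hypothetical per-operation resource costs, in abstract "resource units".
# These numbers are illustrative only, not Ethereum's actual pricing policy.
PRICING_POLICY: dict[str, int] = {
    "add": 3,
    "mul": 5,
    "hash": 30,
    "storage_write": 20_000,
}


def resource_cost(operations: list[str], policy: dict[str, int] = PRICING_POLICY) -> int:
    """Meter a transaction by summing the cost of each lower-level operation."""
    total = 0
    for op in operations:
        if op not in policy:
            raise ValueError(f"no price defined for operation {op!r}")
        total += policy[op]
    return total


# A toy transaction: two arithmetic steps, one hash, one storage write.
tx_ops = ["add", "mul", "hash", "storage_write"]
print(resource_cost(tx_ops))  # 20038
```

Note how the single storage write dominates the total: relative pricing between operations, not just the sum, is what the pricing policy has to get right.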

Transaction Fee Mechanism (TFM): Determines which transactions get executed and how much is paid for them

Every block in Ethereum represents a finite amount of resources made available for consumption by transactions, and every block has a single block producer. Limiting resource consumption per block is necessary to ensure the network can operate in a decentralised manner. The TFM is a mechanism that guides the interactions between users making transaction requests and the block producers responsible for allocating the available resource supply of each block. It is also responsible for facilitating the exchange of payment for transaction inclusion between users and the block producer. Such user payments are a function of the transaction resource cost (produced by the resource pricing mechanism) and a bid (an incentive signal to the block producer).

The goal of the TFM is to produce blocks that reflect the highest level of allocative efficiency possible. This is challenging in Ethereum because the relationship between users and block producers is characterised by the principal-agent problem. The nature of this challenge therefore influences some of the design decisions made for the TFM (e.g. incentive design) to help align the interests of the principals (users) and the agent (the block producer). On both sides, the TFM seeks to ensure that each self-interested group of actors can get the best outcome for itself only through honest interaction with the mechanism. For block producers, this means the highest possible revenue from block production; for users, it means cost-effective and timely transaction execution from which they receive private benefits.

Transaction fees are expressed as an amount of ETH and are a function of the resource cost of a transaction and a bid amount paid per scalar unit of resource.
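The fee relationship just described can be sketched as a single multiplication. This sketch assumes integer amounts denominated in wei (10^18 wei = 1 ETH) to avoid floating-point rounding; the function names and example numbers are hypothetical, and the actual TFM described in Part 3 has more structure than a single bid.

```python
from decimal import Decimal

WEI_PER_ETH = 10**18  # wei is ETH's smallest denomination


def transaction_fee_wei(resource_cost: int, bid_wei_per_unit: int) -> int:
    """Fee = resource cost (scalar units) x bid paid per unit of resource."""
    if resource_cost < 0 or bid_wei_per_unit < 0:
        raise ValueError("cost and bid must be non-negative")
    return resource_cost * bid_wei_per_unit


def wei_to_eth(wei: int) -> Decimal:
    """Convert an integer wei amount to ETH exactly, using Decimal."""
    return Decimal(wei) / Decimal(WEI_PER_ETH)


# Hypothetical example: 21_000 resource units at a bid of 20 * 10**9 wei per unit.
fee = transaction_fee_wei(21_000, 20 * 10**9)
print(fee)              # 420000000000000
print(wei_to_eth(fee))  # 0.00042
```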

Note: Don’t worry if you don’t yet fully understand how these two mechanisms work or what they achieve. I am simply providing some very condensed context around what they both roughly describe. Once the resources involved are well understood, Parts 2 and 3, which describe these mechanisms, will make much more sense.

Together, these two mechanisms show how transaction requests are managed within Ethereum: the resource pricing mechanism determines the resource cost of a transaction, and the TFM then helps determine how much is ultimately paid for it while also guiding how the available resources are allocated per block. We can represent both mechanisms as a single subsystem, which we will refer to as the resource allocation system.

The resource allocation system is a subsystem that integrates two different mechanisms. It effectively links the resource pricing mechanism together with the TFM.

We can understand this subsystem as something that is directly responsible for guiding the optimal allocation of the underlying blockchain resources in Ethereum resulting in economic activity.

The output of this system (the optimal allocation referred to above) is a list of transactions to be executed as part of the next block in Ethereum, approximating the welfare-optimal outcome of the interaction between users and the block producer.
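As a rough illustration of what “a list of transactions for the next block” could look like as an output, the sketch below fills a block that has a resource limit by taking the highest bids per unit first. The `Tx` type, `build_block` function and numbers are hypothetical, and greedy selection is only a simple heuristic for this knapsack-style problem; it is not an algorithm that Ethereum or its block producers are specified to use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    tx_id: str
    resource_cost: int  # scalar resource units, as metered by resource pricing
    bid_per_unit: int   # payment offered per unit of resource


def build_block(pending: list[Tx], block_limit: int) -> list[Tx]:
    """Greedy sketch: take the highest bids per unit first while the block has room."""
    chosen: list[Tx] = []
    used = 0
    for tx in sorted(pending, key=lambda t: t.bid_per_unit, reverse=True):
        if used + tx.resource_cost <= block_limit:
            chosen.append(tx)
            used += tx.resource_cost
    return chosen


pending = [
    Tx("a", resource_cost=50, bid_per_unit=2),
    Tx("b", resource_cost=30, bid_per_unit=5),
    Tx("c", resource_cost=40, bid_per_unit=3),
    Tx("d", resource_cost=20, bid_per_unit=1),
]
block = build_block(pending, block_limit=100)
print([tx.tx_id for tx in block])  # ['b', 'c', 'd']
```

Here the greedy pass skips `a` because it would overflow the limit, then still fits the smaller `d`. A revenue-maximising producer could search more exhaustively; greedy is used only because it is short and easy to follow.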

Having defined this subsystem, we now have sufficient information to roughly describe a conceptual model for Ethereum’s economic system. While the economic activity within Ethereum is largely influenced by the resource allocation system that was described above, there are certainly other factors that play a role. We illustrate this idea below:

The diagram above illustrates that there are potentially multiple other factors that influence economic activity within Ethereum. These influences outside of the resource allocation system will not be covered in this blog post, but here is what they roughly describe:

Monetary Policy: Aspects that affect the circulating supply of Ethereum’s native asset ETH, such as validator rewards and burning (burning ETH is a mechanism design decision related to the implementation of the transaction fee mechanism, but its effect is experienced within monetary policy; more on this later).

Cryptoeconomic Security: The consensus protocol influences validator rewards and network security which impacts the economic dynamics of Ethereum.

Macroeconomic Influence: Describes the effect that external market factors may have on Ethereum’s economic system, such as demand for ETH, price volatility, regulatory risk and much more.

It’s worth mentioning that all of these areas within Ethereum’s economic model interact with one another; however, we will focus on the interactions between the mechanisms of the resource allocation system in helping Ethereum achieve its goals around economic efficiency as well as decentralisation (more on this later).

So now that the tone has been set for this article series, I hope you are ready to dive into what should be an informative read. The goal will be to convince you of how all these interconnected parts come together to produce some interesting outcomes; it is a testament to how interdisciplinary expertise (economics, technology, engineering) has the potential to change the way we do things. While this will be fairly technical, I hope to leave you with plenty of food for thought, in the same way these topics stirred my curiosity.

Anyway, let’s get started 🫡.

Prologue: The Tragedy of the Blockchain Commons

“Therein is the tragedy. Each man is locked into a system that compels him to increase his herd without limit – in a world that is limited. Ruin is the destination toward which all men rush, each pursuing his own best interest in a society that believes in the freedom of the commons”

— Garrett Hardin, The Tragedy of the Commons

Ethereum, by nature of its design, is a decentralised economic system. There are strong reasons to emphasise this detail repeatedly, because being “decentralised” is only possible if the ability to permissionlessly join the network and perform the crucial roles that maintain its operation remains accessible.

An important requirement for this property is that the resource requirements for performing such duties remain modest and predictable. Without careful planning that considers this goal, Ethereum risks losing the valuable properties afforded by decentralisation.

At the same time, however, an economic system must operate efficiently to serve those who participate in it, and must demonstrate sustainability and reliability as a medium of commerce. The ideal outcome, then, is to maintain the decentralised properties of the system (by bounding resource consumption) while using the available resources as efficiently as possible and maximising social surplus (the aspects that drive demand to participate in this economic system). Given the permissionless nature of Ethereum, there are various agents whose strategic behaviour will ultimately influence how well these resources are put to work.

Therefore designing and implementing the mechanisms of Ethereum which coordinate how these resources get utilised is of great importance. If the mechanisms in place tasked with doing this are not well designed, we risk Ethereum experiencing a “tragedy of the commons” problem.

The tragedy of the commons describes a scenario in which access to a common pool of resources (a shared, common-pool resource) is not regulated efficiently by a mechanism.

This lack of regulation can result in excessive resource consumption, poor incentives for the public to participate in maintaining or improving the system, and participation that favours short-term gain over long-term sustainability (myopic behaviour).

The “Tragedy of the Commons” risk is directly applicable to the resource allocation system of Ethereum: if we implement parts of this system poorly, we risk upsetting the incentive balance between the various types of network participants, which can undermine Ethereum’s security, efficiency and accessibility. In addition, inadequate pricing of resource usage can contribute to abuse of shared resources.

To prevent the tragedy of the commons, we must design the resource allocation system so that resource access in Ethereum is managed in a way that is fair, sustainable in the long term, and economically productive.

To achieve this, we identify some core principles that guide the design and operation of the resource allocation system that seek to prevent the risk of falling into the “tragedy of the commons” problem:

Accurate resource pricing: Ethereum must have a system capable of charging users as close as possible to the social costs associated with their transactions. To do so, we must internalise as many of the external costs associated with transactions as possible, so that users bear the full cost of the burden their transaction’s inclusion places on the rest of the network and hence on the shared blockchain resources.

Economically efficient TFM: Ethereum’s transaction fee mechanism should drive optimal market outcomes by providing both principals (users) and the assigned agent (block producer) with useful economic signals and tools to express preferences. The TFM should provide transparent and timely information about the current market-clearing price so that users can decisively express bids for their transactions. On the other side, the TFM should be effective at informing the block producer about how best to maximise their revenue when building a block.

Incentive-compatible TFM: Ethereum’s transaction fee mechanism should be incentive-compatible, so that principals (users) and the assigned agent (block producer) are incentivised to act honestly and according to the prescribed rules of the mechanism. An incentive-compatible TFM aligns the incentives of users and block producers so that each participant’s dominant strategy for maximising their utility is to interact truthfully with the mechanism, yielding a social-welfare-maximising equilibrium. This equilibrium reflects a socially optimal outcome in which no strategic deviation strictly benefits either participant more than truthful interaction does, making Ethereum’s economic system as efficient and fair as possible.

There are, of course, many more considerations that contribute towards preventing the tragedy of the commons (e.g. governance mechanisms, scalability solutions, consensus protocol upgrades), but these are somewhat outside the scope of the resource allocation system and its job within Ethereum.

The core principles above therefore describe some of the key goals we wish to satisfy with the design of the mechanisms in the resource allocation system. Some of these goals even depend on the effectiveness of others. For example, accurate resource pricing helps ensure that transaction costs are assigned fairly and reflect the impact transactions have on the network. The implementation of resource pricing, which aims to capture all dimensions of the costs of transaction processing, therefore affects how good the allocation outcomes guided by a transaction fee mechanism actually are. This shows that accurate resource pricing is a prerequisite for a more economically efficient and fair transaction fee mechanism.

Having described how important these core principles are, we will observe how many of the engineering design decisions within Ethereum reflect this strong desire to be in line with these principles. In a future article, we will also see what future upgrades are actively being researched and proposed to further improve Ethereum’s resource allocation system.

Part 1: Blockchain Resources

Let us start by familiarising ourselves with the concept of nodes and the role they play in blockchains. This will give us a good understanding of the tasks they perform and uncover the types of resources they consume. These details are important because they lay the groundwork for later discussions of resource pricing.

By understanding the tasks that nodes perform and the resources they consume in the process, we gain valuable insight into the costs involved in running Ethereum. This knowledge, in turn, helps us understand the design decisions made around components that manage resource allocation.

Nodes (From First Principles)

A node describes a computer that participates in a blockchain network.

In decentralised blockchains, nodes operate independently, maintaining and updating their own view of the blockchain with the help of the protocol specification (enforced by node software) and P2P network communication.

Nodes receive and broadcast messages to one another, engaging in “information gossip”. On top of this gossip, the blockchain protocol makes these independent nodes converge on a single, canonical view of the network representing the current state of the blockchain and the activities that led to it.
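A minimal simulation of gossip: a message starting at one node is relayed by each node to its peers until every node has seen it. The topology and node names are hypothetical, the global `seen` set stands in for each node remembering which messages it has already relayed, and Ethereum’s real P2P networking is considerably more involved. Gossip only spreads information; agreeing on a canonical view is the job of the consensus protocol.

```python
from collections import deque


def gossip(peers: dict[str, list[str]], origin: str) -> list[str]:
    """Flood a message from `origin` through the peer graph.

    Returns the nodes in the order they first received the message.
    """
    seen = {origin}          # stand-in for each node's memory of relayed messages
    order = [origin]
    queue = deque([origin])
    while queue:
        node = queue.popleft()
        for peer in peers[node]:
            if peer not in seen:  # a node only handles a given message once
                seen.add(peer)
                order.append(peer)
                queue.append(peer)
    return order


# Hypothetical topology: no node is connected to every other node.
peers = {
    "n1": ["n2", "n3"],
    "n2": ["n1", "n4"],
    "n3": ["n1", "n4"],
    "n4": ["n2", "n3", "n5"],
    "n5": ["n4"],
}
print(gossip(peers, "n1"))  # ['n1', 'n2', 'n3', 'n4', 'n5']
```

Even though `n5` is only connected to `n4`, the message still reaches it through intermediate relays.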

From the above description, we can describe Ethereum nodes in greater detail.

An Ethereum node describes a computer that participates in its blockchain. It runs Ethereum client software which implements the required functionality (following the protocol specification) to participate and interact with other reliable nodes in the network to drive Ethereum’s operation forward.

Anyone is capable of participating in the network as a node provided they have a computer with sufficient resources to run the protocol software.

An Ethereum node performs various tasks to maintain the operation of the blockchain, including participating in distributed consensus, updating state, as well as engaging in information gossip, among many other crucial jobs.

To keep this blog post as simple as possible, we treat an Ethereum node as the combination of its consensus and execution clients and will not refer to these parts individually unless specified.

Ethereum can be described as a network of independent nodes running software that executes tasks explicitly defined by the Ethereum protocol specification. The goal of the protocol is to enable nodes to independently compute the current state of Ethereum whenever an update appears, and to communicate with other nodes to collectively agree (via a distributed consensus procedure) on what the new state is and what inputs led to this update. This agreed-upon view is regarded as the canonical view of Ethereum.
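The idea that nodes independently compute the same state can be sketched with a toy, deterministic state transition: two nodes apply the same ordered transfers to the same starting state and compare a hash of the result. The transfer rule, `apply_block`, and `state_digest` are hypothetical simplifications; SHA-256 over JSON is only a stand-in, since Ethereum uses a Merkle Patricia trie and Keccak-256 hashing to commit to state.

```python
import hashlib
import json


def apply_block(state: dict[str, int], txs: list[tuple[str, str, int]]) -> dict[str, int]:
    """Toy state transition: apply (sender, recipient, amount) transfers in order."""
    new_state = dict(state)  # never mutate the input state
    for sender, recipient, amount in txs:
        if new_state.get(sender, 0) < amount:
            continue  # toy rule: skip transfers the sender cannot afford
        new_state[sender] = new_state.get(sender, 0) - amount
        new_state[recipient] = new_state.get(recipient, 0) + amount
    return new_state


def state_digest(state: dict[str, int]) -> str:
    """Hash a canonical encoding of the state so nodes can compare results cheaply."""
    encoded = json.dumps(state, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()


genesis = {"alice": 10, "bob": 0}
block = [("alice", "bob", 4), ("bob", "carol", 1)]

# Two independent nodes run the same rules on the same inputs...
node_a = apply_block(genesis, block)
node_b = apply_block(genesis, block)
# ...and reach the same state, which they can check by comparing digests.
print(node_a)  # {'alice': 6, 'bob': 3, 'carol': 1}
print(state_digest(node_a) == state_digest(node_b))  # True
```

Reordering the two transfers changes the result (bob cannot pay carol before receiving funds, so that transfer is skipped), which is why agreement is needed on the ordering of transactions and not only on their content.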

At a high level, this helps us understand how Ethereum’s architecture resembles that of a decentralised network (made up of these independent nodes). Anyone is allowed to join the network as a node, where each node does its own processing and then coordinates with the others to reach consensus on how Ethereum has changed. This highlights two properties that help characterise Ethereum as a decentralised network: permissionlessness and trustlessness.

Permissionless: Anyone is able to run a node and participate in the network.

Trustless: Nodes do not need to trust each other or a central entity.

A consensus protocol ensures all nodes can trustlessly agree on the current view of the blockchain’s history, the sequence of valid transactions to execute next, and the result of that execution.

Another dimension of trustlessness lies in the ability of network stakeholders to run their own Ethereum nodes and independently verify statements made on the blockchain, rather than querying other nodes whose responses carry no guarantee of being true.

We will not be going through how the consensus protocol works in Ethereum. All that is relevant to know going forward in this blog post is that it is responsible for ensuring nodes come to agreement about the global ordering of transactions from network inception as well as the result of executing them in that order.

Introducing nodes in the manner done above is important because in the next section we will begin to explore the underlying resources that drive Ethereum’s functionality and hence economic activity. At a low-level, these are the resources that nodes expend to drive network activity.

Computational Resources

A node depends on a variety of hardware resources, including CPU, RAM, disk, and network bandwidth, to perform its functions. We refer to these types of resources as computational resources.

These resources are heterogeneous: they differ in their characteristics and are required by different tasks within nodes. By heterogeneous, we mean that each resource sub-type serves a distinct purpose and has its own specific properties and limitations. We briefly describe the purpose of each resource sub-type below:

CPU: Responsible for processing instructions. Typically measured by percentage of utilisation.

RAM: Stores data that needs to be readily accessible. Typically measured by capacity utilisation (GB).

Disk: Serves as long-term storage. We consider overall capacity utilisation (GB) as well as read/write disk I/O interactions (MB/second).

Network bandwidth: Determines the maximum rate of data transfer that a node can handle. Typically measured by data transfer rates (MB/second).

In addition to being heterogeneous, these resources are also orthogonal. This means the resource sub-types are independent of one another, so an increase or decrease in the quantity of one does not directly affect the quantities of the others. For example:

Increasing RAM does not directly enhance CPU processing power.

Expanding disk storage does not directly increase network bandwidth.

This orthogonality allows each resource to be considered and optimised independently (a property that is highly relevant in emerging resource pricing and TFM designs).
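Orthogonality means a budget has to be checked per resource: surplus in one dimension cannot make up for a shortfall in another. The sketch below assumes hypothetical per-block limits for three resources. It illustrates the idea behind multidimensional designs and is not how Ethereum currently meters transactions, which, as described earlier, uses a single scalar quantity.

```python
# Hypothetical per-block budgets for each orthogonal resource (illustrative units).
BLOCK_LIMITS = {"cpu": 100, "disk_write": 50, "bandwidth": 80}


def fits(usage: dict[str, int], limits: dict[str, int] = BLOCK_LIMITS) -> bool:
    """A block is feasible only if every resource stays within its own limit."""
    return all(usage.get(resource, 0) <= cap for resource, cap in limits.items())


def headroom(usage: dict[str, int], limits: dict[str, int] = BLOCK_LIMITS) -> dict[str, int]:
    """Remaining capacity per resource; spare CPU cannot offset excess disk writes."""
    return {resource: cap - usage.get(resource, 0) for resource, cap in limits.items()}


usage = {"cpu": 40, "disk_write": 60, "bandwidth": 30}
print(fits(usage))      # False: disk_write exceeds its limit
print(headroom(usage))  # {'cpu': 60, 'disk_write': -10, 'bandwidth': 50}
```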

Properties of Computational Resources

In understanding the roles and interplay of the various computational resources within an Ethereum node, two critical aspects demand our attention: their finite nature and the varying externalities produced from their usage:

Finite nature of resources: Each computational resource sub-type is intrinsically limited, and the capability of nodes to execute the tasks that drive Ethereum forward is bound by this constraint.

Externalities from resource consumption: The consumption of these resources may produce hidden side effects that are experienced throughout the network, affecting its overall performance.

Finite nature of resources:

Nodes are simply physical computers, so it is not difficult to imagine that they are constrained by the capacity of their individual hardware components.

It is in the best interest of Ethereum protocol designers to ensure it is feasible to run nodes on modest hardware, so they must set realistic resource consumption targets for protocol activities that balance efficiency and feasibility. This must be managed in a way that preserves the decentralisation properties of Ethereum.

Externalities from usage:

In this context, externalities refer to unintended side effects experienced somewhere else outside of the activity that directly consumed the resource. It is important to acknowledge that the use of a specific resource may incur hidden costs elsewhere because without considering this, the full impact of the resource’s utilisation cannot be properly understood or addressed.

It is in the best interest of Ethereum protocol designers to fully understand the impact of resource utilisation so that they design and implement the technical protocol procedures that drive Ethereum’s operation in a manner that meets expectations around efficiency and feasibility. By fully understanding the nature of these resources and the constraints we impose on them, we can make more informed decisions around how these resources should be allocated in all phases of Ethereum operation. In addition to this, we can determine how we should go about pricing access to resources demanded by transactions.

We now have a much more comprehensive understanding of the nature of the underlying resources relied upon by Ethereum. In this next section we will start to explore the processes that consume them.

How are blockchain resources used?

We are now going to take a closer look at some of the actual tasks/operations performed by nodes that consume the underlying resources described in the previous section. These tasks, each with their own unique resource requirements, form the backbone of Ethereum’s functionality. We categorise them according to the type of activity they involve and express them as cost classes.

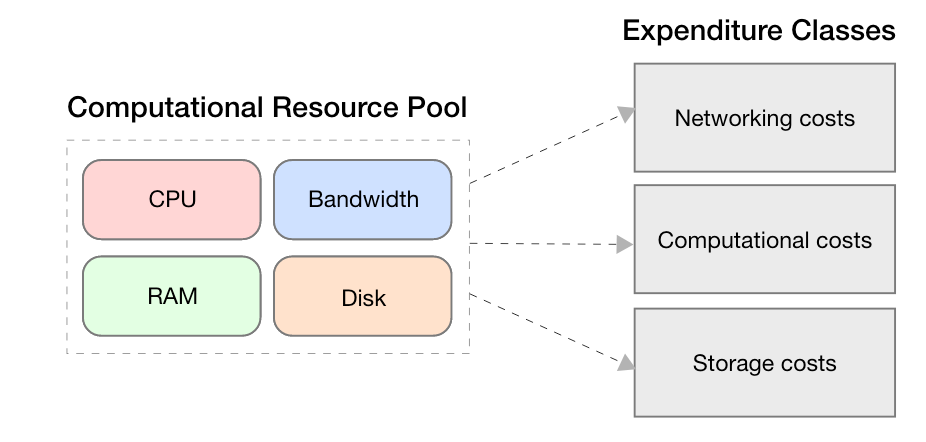

They can be categorised into three main expenditure classes - networking costs, computation costs, and storage costs.

An expenditure class can be described as a categorisation of certain operations or tasks by the type of computational activity they engage in.

Let’s look at each of these classes to get a better understanding of the specific activities they encompass:

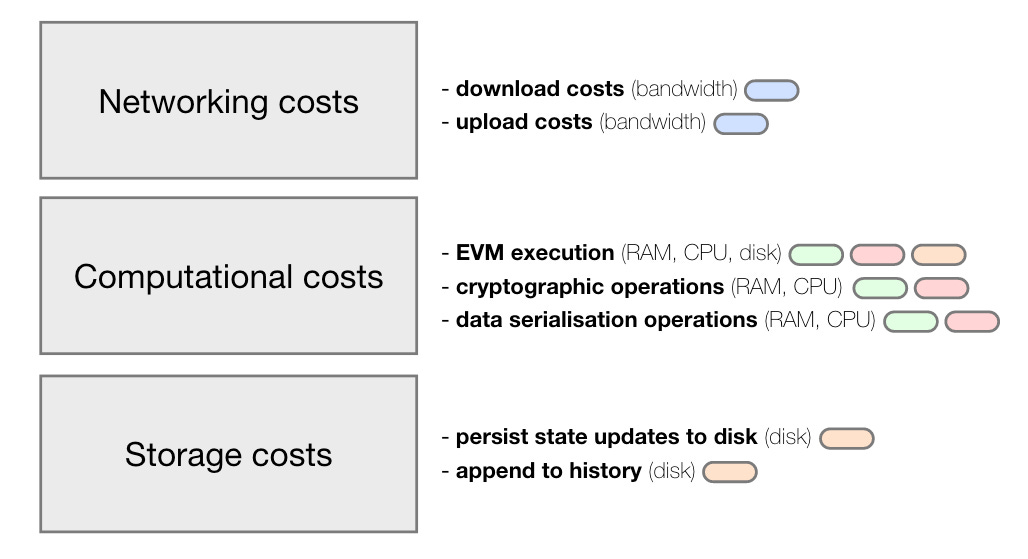

1. Networking Costs

These are tasks related to the transmission and reception of data across the network. They include:

Initial blockchain sync: This is a once-off cost incurred when a node first joins the Ethereum network. The node is required to download all blocks and transactions from genesis to the current block, which requires significant bandwidth.

Download: Nodes need to continuously download new blocks and transactions from the gossip network to stay in sync with the latest state of Ethereum.

Broadcast: Nodes need to broadcast new transactions and new valid blocks they learn about.

2. Computational Costs

These are tasks that require computational processing. They include:

Transaction execution: In its most basic form, this process entails updating account balances during an ETH transfer from one account to another. Beyond this simple case, transaction execution commonly involves interactions with smart contracts (or their deployment). Such interactions require access to memory and disk (to copy state into the EVM execution context) in preparation for execution. Executing these smart contract invocations uses both CPU processing power and RAM.

Cryptographic operations: Tasks that require cryptographic operations, such as signature verification or the generation of cryptographic hashes.

Data serialisation: Tasks that require encoding/decoding operations such as Recursive Length Prefix (RLP) and SimpleSerialize (SSZ).
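To give a feel for what data serialisation involves, here is a minimal encoder for the byte-string and list cases of RLP, following the length-prefix rules of the RLP specification (a single byte below 0x80 encodes as itself, payloads of up to 55 bytes get a one-byte prefix, and longer payloads are prefixed with their length). It is a teaching sketch: `rlp_encode` and `_length_prefix` are our own names, it does not decode, and real clients rely on complete, tested implementations.

```python
from typing import Union


def rlp_encode(item: Union[bytes, list]) -> bytes:
    """Minimal Recursive Length Prefix (RLP) encoder for byte strings and nested lists."""
    if isinstance(item, bytes):
        if len(item) == 1 and item[0] < 0x80:
            return item  # a single low byte is its own encoding
        return _length_prefix(len(item), 0x80) + item
    if isinstance(item, list):
        payload = b"".join(rlp_encode(x) for x in item)
        return _length_prefix(len(payload), 0xC0) + payload
    raise TypeError("this sketch encodes only bytes and lists")


def _length_prefix(length: int, offset: int) -> bytes:
    """Short form for payloads up to 55 bytes, long form (length-of-length) above that."""
    if length <= 55:
        return bytes([offset + length])
    length_bytes = length.to_bytes((length.bit_length() + 7) // 8, "big")
    return bytes([offset + 55 + len(length_bytes)]) + length_bytes


print(rlp_encode(b"dog").hex())            # 83646f67
print(rlp_encode([b"cat", b"dog"]).hex())  # c88363617483646f67
print(rlp_encode(b"").hex())               # 80
print(rlp_encode([]).hex())                # c0
```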

3. Storage Costs

These are tasks involved in updating storage (history and state). They include:

Persisting state updates: After Ethereum has executed a block’s list of transactions, updated state is written to disk.

Block appending: The task of appending a new block to the blockchain. Whenever a new block is verified and added to the blockchain, it extends the history of the network. Blockchain history is “prunable” because you do not necessarily need parts of it (such as the transaction bodies of previous blocks) once you have executed them to reach blockchain sync. Pruning is an important feature that keeps the cost of running a full node feasible by reducing storage requirements and discarding data that is no longer essential to the node’s operation.
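The pruning idea can be sketched as a store that keeps every block header but drops transaction bodies older than a retention window. `ChainStore`, `retain_bodies`, and the string stand-ins for headers and bodies are hypothetical; real clients differ in what they prune and when.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Block:
    number: int
    header: str           # stand-in for the block header
    body: Optional[list]  # stand-in for transaction bodies; None once pruned


@dataclass
class ChainStore:
    retain_bodies: int    # hypothetical: keep bodies only for the most recent N blocks
    blocks: list = field(default_factory=list)

    def append(self, block: Block) -> None:
        """Append a verified block, then prune bodies outside the retention window."""
        self.blocks.append(block)
        self.prune()

    def prune(self) -> None:
        """Drop old transaction bodies while keeping every header."""
        cutoff = len(self.blocks) - self.retain_bodies
        for block in self.blocks[:max(cutoff, 0)]:
            block.body = None


store = ChainStore(retain_bodies=2)
for n in range(5):
    store.append(Block(number=n, header=f"header-{n}", body=[f"tx-{n}"]))
print([b.body is not None for b in store.blocks])  # [False, False, False, True, True]
```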

Now that we have a better understanding of these expenditure classes and the kinds of tasks nodes perform, it is clear that these tasks are quite diverse and intrinsically connected to different types of computational resources. With the groundwork established, let’s dive deeper into the specific tasks associated with each expenditure class and identify the particular computational resources they consume.

The illustration above shows the three expenditure classes and the tasks we associate with them. In addition to this, we identify which computational resource types are required by each task which allows us to make the following deductions about each class:

Networking costs consume bandwidth resources.

Computational costs consume RAM, CPU, and disk resources.

Storage costs consume disk resources.

While tasks classified as computational costs consume three different computational resource types, they are primarily characterised by their CPU usage; the other resource types assist with the execution of these tasks in more minor ways. Tasks classified as networking or storage costs are simpler, as they only consume network bandwidth and disk resources respectively.
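The deductions above can be encoded as a small lookup table. This is a minimal, hypothetical sketch (the names `EXPENDITURE_CLASSES` and `classes_consuming` are illustrative, not taken from any client) that restates the three mappings and lets us ask which classes draw on a given resource:

```python
# Hypothetical encoding of the deductions above: each expenditure class
# maps to the set of computational resource types its tasks consume.
EXPENDITURE_CLASSES: dict[str, set[str]] = {
    "networking": {"bandwidth"},
    "computational": {"cpu", "ram", "disk"},
    "storage": {"disk"},
}


def classes_consuming(resource: str) -> list[str]:
    """Return, sorted by name, the expenditure classes whose tasks use `resource`."""
    return sorted(
        name
        for name, resources in EXPENDITURE_CLASSES.items()
        if resource in resources
    )


if __name__ == "__main__":
    # Disk is consumed by both computational and storage tasks.
    print(classes_consuming("disk"))
    print(classes_consuming("bandwidth"))
```

Disk is the only resource that appears in more than one class, which matches the observation that transaction execution and state persistence both touch it.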

The Block Processing Procedure

In the previous section we identified various tasks that Ethereum nodes perform that are vital to maintaining operation of the system, tasks that rely on different combinations of computational resources. In this section we will describe the group of tasks responsible for executing transactions and updating the blockchain - a higher level process we will refer to as the block processing procedure.

The block processing procedure describes a process involving a series of steps to execute a block of transactions on Ethereum.

This procedure is carried out by every node in the network whenever it receives a block over the network.

When the block producer for the next block has picked a list of transactions for execution, it prepares a block and broadcasts it to the rest of the network, allowing other nodes to execute these transactions and update the blockchain accordingly.

Earlier in this article we introduced the resource allocation system and explained how it was designed to help Ethereum allocate its resources as efficiently as possible. It relates to the block processing procedure because the list of transactions produced by this system is the direct input to the block processing procedure, which uses it to update the blockchain. We illustrate the extrinsic dependence of the block processing procedure on the resource allocation system below:

Given the nature of this relationship, we will now explore how the computational resource demands of the block processing procedure can vary with the contents of a block. We will do this by going through all the computational steps involved in the block processing procedure.

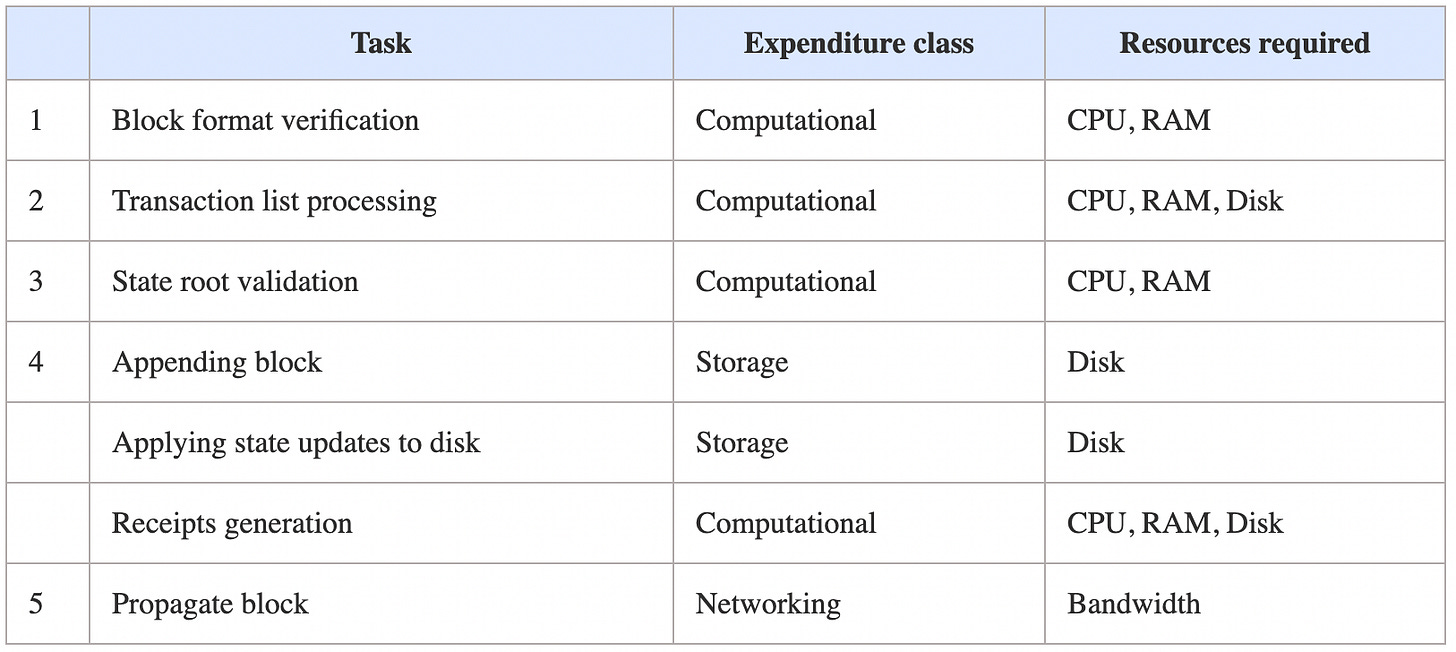

The block processing procedure starts when a node first receives a block. It then follows the steps below:

Block Format Verification: This step involves checking that the incoming block adheres to the expected format and meets the necessary field requirements, along with general validity checks (e.g. checking block timestamp is greater than its parent).

Transaction List Processing: During this step, the node fetches the relevant state from disk storage and loads it into memory. Transactions are then executed sequentially in the order specified by the block. A record of state modifications is kept in memory throughout the process.

State Root Validation: This step involves comparing the computed state root, resulting from the execution of transactions, with the state root specified in the block header. If the state roots do not match, the block is deemed invalid.

Local View Update: This step involves:

Appending the newly verified block to the blockchain.

Applying the state changes resulting from the transaction execution to disk.

Generating receipts for transactions.

Block Propagation: The final step involves broadcasting the newly processed block to other nodes in the network, contributing to the overall synchronisation of the blockchain. The node will then listen for future blocks that other nodes broadcast that build upon its latest view of the blockchain.
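To make the order of these steps concrete, here is a minimal toy sketch. It assumes a drastically simplified world: state is a dictionary of account balances, every transaction is a plain transfer, and the “state root” is a SHA-256 hash of the sorted state rather than the Merkle Patricia trie root (hashed with Keccak-256) that real clients compute. The `Block` and `Node` classes and `state_root` are hypothetical and do not correspond to any client’s API.

```python
import hashlib
import json
from dataclasses import dataclass, field


def state_root(state: dict[str, int]) -> str:
    """Stand-in for a state root: SHA-256 over the canonically sorted state."""
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


@dataclass
class Block:
    number: int
    timestamp: int
    parent_timestamp: int
    transactions: list[tuple[str, str, int]]  # (sender, recipient, amount)
    claimed_state_root: str


@dataclass
class Node:
    state: dict[str, int]
    chain: list[Block] = field(default_factory=list)
    receipts: list[dict] = field(default_factory=list)

    def process_block(self, block: Block) -> bool:
        # 1) Block format verification (reduced here to a timestamp check).
        if block.timestamp <= block.parent_timestamp:
            return False

        # 2) Transaction list processing: copy state into memory, execute in
        #    order, and record the modifications in the in-memory copy.
        pending = dict(self.state)
        outcomes = []
        for sender, recipient, amount in block.transactions:
            ok = pending.get(sender, 0) >= amount
            if ok:
                pending[sender] -= amount
                pending[recipient] = pending.get(recipient, 0) + amount
            outcomes.append((sender, recipient, ok))

        # 3) State root validation against the root claimed by the block.
        if state_root(pending) != block.claimed_state_root:
            return False

        # 4) Local view update: append the block, apply state, generate receipts.
        self.chain.append(block)
        self.state = pending
        self.receipts.extend(
            {"sender": s, "recipient": r, "success": ok} for s, r, ok in outcomes
        )

        # 5) Block propagation to peers would follow here (omitted in this sketch).
        return True


if __name__ == "__main__":
    node = Node(state={"alice": 10, "bob": 0})
    block = Block(1, 2, 1, [("alice", "bob", 3)], state_root({"alice": 7, "bob": 3}))
    print(node.process_block(block), node.state)
```

Nothing touches the node’s persistent view until the state root matches, so an invalid block leaves the node unchanged. Recording a failed transfer as unsuccessful, rather than rejecting it, is a simplification of this sketch.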

We identify the required resource types of each of these steps in the table below:

In the table above, we can observe how the five primary steps of the block processing procedure are listed along with the expenditure class they belong to and the computational resources they require. We can also observe that step 4 is broken down into three parts: appending the block, applying state updates to disk, and generating receipts. We do this to show that step 4 involves tasks that fall under different expenditure classes, which together describe what is required to update the blockchain once a block has been deemed valid.

Having identified the computational resources required by each of these steps, we have a better understanding of their unique resource requirements. While this is certainly useful, we still don’t know what quantities of resources these steps require, nor whether those requirements grow with the block’s contents or remain constant regardless of them.

In order to understand the resource consumption characteristics of these steps, we need more information. In the next section we use computational complexity analysis to reveal the cost trends of each of these steps.

Cost Dynamics of the Block Processing Procedure

Computational complexity describes the quantification, characterisation, and measurement of computational resources required to execute a specific process or task.

Analysing computational complexity can help us observe behavioural phenomena that emerge during the execution of a process, providing useful insights such as bottleneck identification, patterns of resource use, and much more.

Computational complexity analysis of the block processing procedure will help us find out which tasks executed in these steps are more resource-intensive than others. It will also help us understand how usage patterns of certain resource types can contribute to execution bottlenecks, given that these resources have unique properties and limitations.

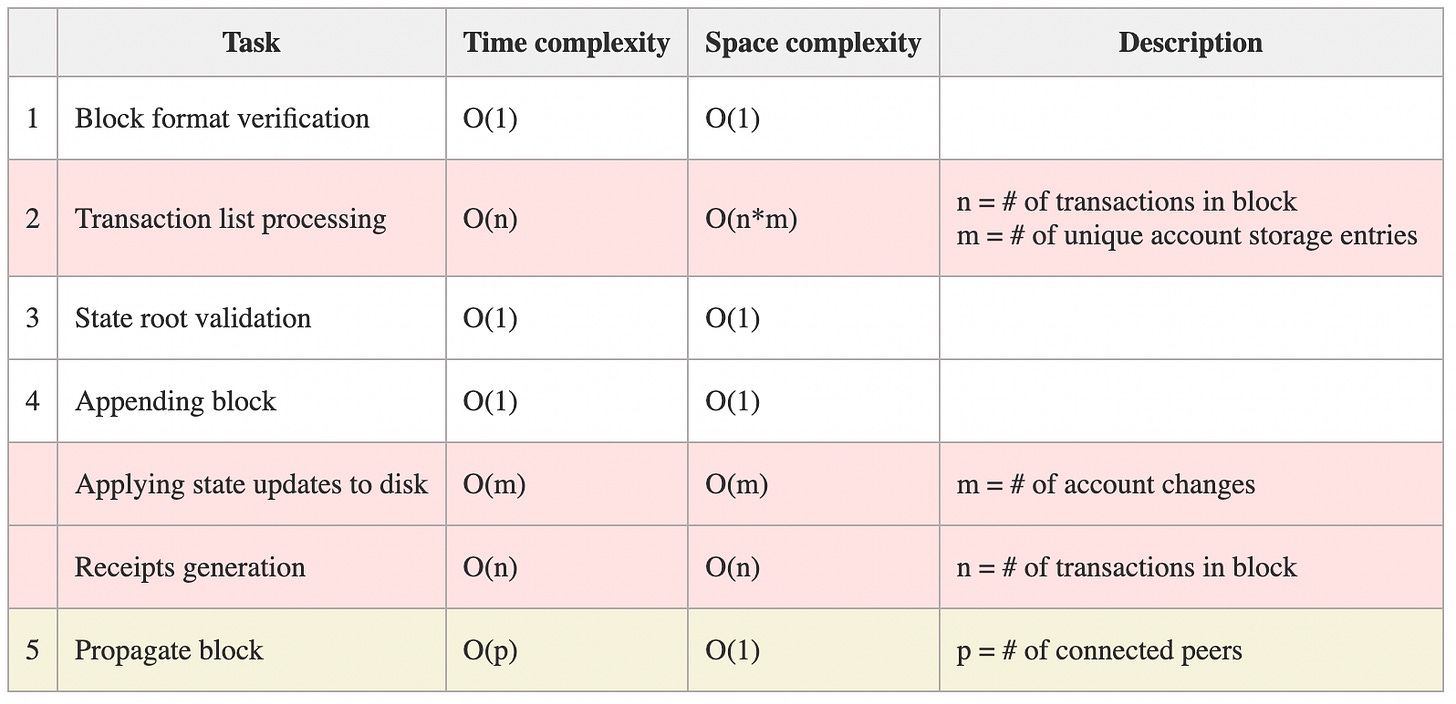

There are two aspects of computational complexity that will tell us useful things about the resources that each of these steps consume:

Time complexity: How the time (number of computational steps) required to execute a given task grows with the size of its input. It serves as a measure of the quantity of computational processing resources, chiefly CPU, that a task consumes.

Space complexity: How the computer memory required to execute a given task grows with the size of its input. We typically use space complexity to describe the consumption of memory or disk resources in executing a task.

With these two aspects, we can learn a lot about the resource consumption characteristics of each step in the procedure. The table below describes the time and space complexity associated with each step:

Regarding the table above, you don’t necessarily have to understand how we arrived at the specific complexity figures. Instead, consider them broadly accepted assumptions that describe the resource consumption characteristics of each step.

What does the computational complexity analysis reveal?

The computational complexity analysis shows us that the block processing procedure involves a combination of fixed and variable costs. Let us define what we mean by fixed and variable costs:

Fixed costs describe costs that do not change in relation to the volume of activity within a system. These costs remain constant regardless of how the input to a process varies.

Variable costs describe costs that fluctuate based on the level of activity within a system. These costs typically change depending on the size, complexity, or quantity of inputs provided to a process.

These definitions help us understand what we mean by these two types of costs. Let us now build a more intuitive understanding of what it means for each of the two aspects of computational complexity to have these costs.

Time complexity:

Fixed costs: The number of computational steps required in processing remains constant.

Variable costs: The number of computational steps can grow depending on the information fed into the process.

Space complexity:

Fixed costs: The amount of memory or disk space required in performing the computational task remains constant.

Variable costs: The amount of memory or disk space required in performing the computational task can grow depending on the information fed into the process.
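A toy illustration of the difference, assuming a header check that inspects a fixed, hypothetical set of fields and a transaction loop that does one unit of work (and keeps one receipt in memory) per transaction. Step counts stand in for time and stored receipts stand in for space; `HEADER_FIELDS`, `verify_header` and `execute_transactions` are illustrative names only.

```python
# Hypothetical subset of header fields checked by a format verifier.
HEADER_FIELDS = ("parent_hash", "number", "timestamp", "gas_limit")


def verify_header(block: dict) -> tuple[bool, int]:
    """Check each header field is present; return (valid, steps taken)."""
    steps, ok = 0, True
    for name in HEADER_FIELDS:
        steps += 1                 # one check per field: a fixed cost
        ok = ok and name in block
    return ok, steps


def execute_transactions(block: dict) -> tuple[int, int]:
    """Return (steps taken, receipts held in memory) for the block's transactions."""
    steps, receipts = 0, []
    for tx in block["transactions"]:
        steps += 1                 # one unit of work per transaction: a variable cost
        receipts.append({"tx": tx, "ok": True})
    return steps, len(receipts)   # memory used by receipts also grows with n


if __name__ == "__main__":
    for n in (1, 10, 100):
        block = {"parent_hash": "0x00", "number": 1, "timestamp": 2,
                 "gas_limit": 1, "transactions": list(range(n))}
        _, header_steps = verify_header(block)
        tx_steps, stored = execute_transactions(block)
        print(n, header_steps, tx_steps, stored)
```

The header check stays at four steps whatever the block carries, while both the transaction work and the stored receipts grow linearly with n.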

Now that we have explained what fixed and variable costs mean for both time and space complexity, let us walk through the block processing procedure step-by-step and explain the nature of computational complexity brought about by the execution of the various tasks involved:

1) Block Format Verification

This step has a time complexity of O(1) and a space complexity of O(1).

This operation has only a fixed cost.

The resources consumed during this step remain constant, irrespective of the contents of a block.

2) Transaction List Processing

This step has a time complexity of O(n) and a space complexity of O(n*m).

This operation has variable costs.

As the number of transactions (n) increases, so too does the number of required computational processing steps.

As the number of transactions (n) and the number of unique account storage entries (m) increase, so too does the amount of required memory and disk resources.

3) State Root Validation

This step has a time complexity of O(1) and a space complexity of O(1).

This operation has only a fixed cost.

The resources consumed during this step remain constant, irrespective of the contents of a block.

4a) Local View Update: Appending Block

This step has a time complexity of O(1) and a space complexity of O(1).

This operation has only a fixed cost.

The resources required to append a new block to the blockchain remain constant.

4b) Local View Update: Applying State Updates to Disk

This step has a time complexity of O(m) and a space complexity of O(m).

This operation has variable costs.

As the number of state changes accrued during transaction execution increases, so too do the computational steps and the memory/disk space required to apply the state update.

4c) Local View Update: Receipts Generation

This step has a time complexity of O(n) and a space complexity of O(n).

This operation has variable costs.

Each executed transaction has a receipt generated alongside it, which includes useful information such as the log events it emitted and a bloom filter over those logs.

As the number of transactions (n) in a block increases, so too does the number of required computational processing steps.

As the number of transactions (n) in a block increases, so too does the amount of memory/disk resources required to generate and store the receipts in Ethereum’s receipts trie.

5) Block Propagation

This step has a time complexity of O(p) and a space complexity of O(1).

This operation has both fixed and variable costs.

The number of required computational processing steps increases with the number of peers a node is connected to (p).

The required memory/disk resources remain constant because broadcasting a block does not require additional space per peer.
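The complexity figures above can be turned into a rough step-count model. This sketch assumes every constant factor is 1, so the numbers are only meaningful relative to one another; `time_costs`, `space_costs` and `growing_steps` are hypothetical helpers. Holding the peer count p fixed (it is chosen by the node operator, not by block contents) shows which steps grow with the contents of a block:

```python
def time_costs(n: int, m: int, p: int) -> dict[str, int]:
    """Relative time cost of each step (all constant factors assumed to be 1)."""
    return {
        "block_format_verification": 1,    # O(1)
        "transaction_list_processing": n,  # O(n)
        "state_root_validation": 1,        # O(1)
        "append_block": 1,                 # O(1)
        "apply_state_updates": m,          # O(m)
        "receipts_generation": n,          # O(n)
        "block_propagation": p,            # O(p)
    }


def space_costs(n: int, m: int) -> dict[str, int]:
    """Relative space cost of each step (all constant factors assumed to be 1)."""
    return {
        "block_format_verification": 1,        # O(1)
        "transaction_list_processing": n * m,  # O(n*m)
        "state_root_validation": 1,            # O(1)
        "append_block": 1,                     # O(1)
        "apply_state_updates": m,              # O(m)
        "receipts_generation": n,              # O(n)
        "block_propagation": 1,                # O(1)
    }


def growing_steps(small: dict[str, int], large: dict[str, int]) -> list[str]:
    """Steps whose cost rises when moving from the small input to the large one."""
    return [step for step, cost in small.items() if large[step] > cost]


if __name__ == "__main__":
    peers = 50  # held fixed: chosen by the node operator, not by block contents
    print(growing_steps(time_costs(10, 20, peers), time_costs(1000, 2000, peers)))
    print(growing_steps(space_costs(10, 20), space_costs(1000, 2000)))
```

With p held constant, the same three steps grow in both time and space: transaction list processing, applying state updates, and receipts generation.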

We have once again walked through the block processing procedure, this time to understand the computational complexity involved in carrying out each step. Doing so revealed several fixed and variable costs that describe how various computational resources are consumed in each step, highlighting that different tasks require different resource types, in varying quantities, to fulfil their computational objectives.

In the next section, we will use all our findings related to the block processing procedure to motivate the need to constrain the resource consumption involved in this procedure. In doing so, we will also understand why this is so important for a decentralised system like Ethereum.

Constraining Resource Consumption

In the previous section we found out that there are several fixed and variable costs involved in the block processing procedure. We summarise our findings in the table below:

Our analysis shows us that there are several variable costs that exist within the block processing procedure. These identified variable costs are explicitly pointed out below:

Transaction List Processing:

Variable Cost: The number of computational processing steps increases as the number of transactions increases.

Variable Cost: The memory/disk resources required increase as the number of transactions and unique accounts involved in transactions increase.

Applying State Updates to Disk:

Variable Cost: The number of computational processing steps increases as the number of required account state changes increases.

Variable Cost: The memory/disk resources required increase as the number of required account state changes increases.

Receipts Generation:

Variable Cost: The number of computational processing steps increases as the number of transactions increases.

Variable Cost: The memory/disk resources required increase as the number of transactions increases.

Block Propagation:

Variable Cost: The number of computational processing steps increases as the number of peers a node is connected to increases.

Looking at the variable costs described above, we notice a common source driving most of them: the number of transactions involved and the complexity of the interactions they describe. Given this understanding, we can reason that if we do not limit the resource consumption involved with processing a list of transactions, these costs could continue to increase significantly. A variable cost also exists in the block propagation step; however, it is not relevant here because the number of connected peers is not part of the protocol specification and is left to the discretion of the participant running the node. We can therefore treat this variable cost as negligible for our purposes.

Consequence of Unconstrained Resource Consumption

In earlier sections we introduced the key properties of computational resources, one of which is that they are finite in nature.

In a decentralised system like Ethereum, it is vital that we keep the resource requirements to run nodes reasonably low and accessible. Since transactions significantly influence how costly the block processing procedure is, we can reason that they may be one of the greatest variable factors determining how demanding the resource requirements to run a node are.

Therefore, if we do not impose reasonable limits on the amount of computational resources that can be expended in executing transactions and updating the blockchain, we risk many nodes running into problems like resource exhaustion due to their modest computational resources.

Resource exhaustion describes a scenario in which all available resources of a node have been fully consumed and are now depleted, resulting in the node no longer being able to function efficiently or at all.

This can happen due to hardware limitations, badly-optimised software, or network-related factors.

A diverse set of nodes is crucial for ensuring that changes to the blockchain are trustlessly computed and verified (via the distributed consensus process), and is thus vital to serving the network. Failing to constrain resources would therefore likely result in many nodes experiencing resource exhaustion and dropping out of the network. Participants with modest computer systems would no longer be able to participate by running nodes, leaving the duty of trustlessly computing the changing state of the blockchain to a small minority of sophisticated actors with high-performance computer systems.

In this scenario we undermine the permissionless ability to participate in Ethereum and trustlessly compute the state of the blockchain. As a consequence, users who interact with Ethereum by sending transactions have no option but to trust this minority of sophisticated actors to honestly receive and broadcast their transactions and to report the state of the blockchain.

Ethereum would still technically remain permissionless and trustless, but running a full node would only be possible for those with extraordinary computational resources at their disposal. Given this high barrier to entry, these properties would be significantly undermined, and the ultimate consequence is that the decentralisation of the entire system is undermined.

Protecting Permissionless and Trustless Properties

By now it is clear how severe the consequences of failing to constrain the resource consumption of key activities like the block processing procedure can be for Ethereum. We put the diverse set of nodes at risk of problems like resource exhaustion, thus restricting who gets to trustlessly compute the current state of the blockchain and interact directly with it. If we do not address this concern, we essentially make running a node on modest, consumer-grade hardware unmanageable. Failing to constrain resource usage would thus make running a node severely inaccessible to the average person.

From all of the reasoning above, you will now understand that bounding the resource consumption of critical blockchain tasks like the block processing procedure is vital to maintaining the decentralisation of Ethereum. We seek to preserve the strong guarantees of the permissionless and trustless properties that have always been core principles of the network:

Permissionless: By keeping the resource requirements sufficiently modest, it remains possible for a broad range of participants with varying levels of computational resources at their disposal to run a node and interact with the network without an intermediary.

Trustless: By keeping the resource requirements sufficiently modest, we ensure that running a node remains accessible, allowing participants to trustlessly compute the current state of the blockchain without the need for an intermediary.

Keeping the costs associated with running an Ethereum node low is a crucial requirement for maintaining a resilient, censorship-resistant, decentralised network. If we cannot maintain a sufficiently decentralised network, we lose all the valuable properties of a blockchain that justify its value proposition.

It’s worth mentioning that while constraining resource consumption is important in preserving the decentralisation of Ethereum, it is only one of many factors that influence this property. Various other concerns, such as the barrier to entry to become a block producer, also play an important role, but such matters are out of the scope of this article.

Practical Solutions to Constrain Resource Consumption

To address the risks posed by resource exhaustion, we deliberately constrain the maximum amount of computational resources that may be expended while processing a block of transactions. You can imagine this as a form of computational resource provisioning: the resource impact of executing a set of transactions is measured, and the cumulative resource usage must fall within a maximum limit specified by Ethereum through the protocol specification.

We mentioned earlier in this section that the size of the variable costs involved in carrying out the block processing procedure depends on the amount of computational resources used while executing a block’s transactions and updating the blockchain accordingly. We saw that increasing the number of transactions in a block increases the resource costs of the entire procedure, but also that the complexity of those transactions is a decisive factor.

With this information, what sort of solution can we propose that both sets a maximum limit on resource consumption and is able to meter it?

Solution 1: Set maximum limit of transactions allowed in a block?

What if we decided to constrain the resource consumption of the block processing procedure by setting a hard limit on the number of transactions allowed within a single block?

From a naive point of view, this would indeed place some constraint on resource consumption, if we imagined all the transactions involved executing in a timely manner. However, such a limit would likely encourage users to send more complex, very resource-intensive transactions to make the most of their opportunity to get included in a block. This is one example of how such a design decision could lead to resource abuse by users. Unfortunately, this isn’t even the biggest problem; it only gets worse from here.

While the above shows that this method is inefficient, the next point of failure shows that Ethereum would simply stop working. Because the EVM (Ethereum’s execution environment) is capable of performing generalised computation, it is Turing-complete. The implication is that transactions can trigger very complex execution and can potentially cause infinite loops. If Ethereum were to limit resource consumption by restricting the number of transactions allowed within a block, any one of these transactions could exhaust a node’s computational resources, creating a resource exhaustion vector. Given the undecidability of the halting problem and the Turing-completeness of the EVM, Ethereum cannot determine in advance whether a given transaction’s execution would ever terminate. This demonstrates why constraining the resource consumption of the block processing procedure by restricting the number of transactions will not work.
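A toy sketch of why a transaction-count cap fails: each transaction is modelled, hypothetically, as the number of loop iterations it requests, and `MAX_TXS_PER_BLOCK` is an arbitrary cap chosen purely for illustration.

```python
MAX_TXS_PER_BLOCK = 3  # hypothetical cap on transactions per block


def block_is_allowed(txs: list[int]) -> bool:
    """The only rule under Solution 1: count transactions, ignore their work."""
    return len(txs) <= MAX_TXS_PER_BLOCK


def work_required(txs: list[int]) -> int:
    """Total loop iterations the block's transactions ask a node to perform."""
    return sum(txs)


light = [10, 10, 10]
heavy = [10, 10, 10**9]  # same number of transactions, vastly more work

print(block_is_allowed(light), work_required(light))  # True 30
print(block_is_allowed(heavy), work_required(heavy))  # True 1000000020
```

Both blocks pass the cap, yet the second demands over 30 million times more work. A transaction that never halts cannot even be given a finite entry in this list, which is exactly the case a count-based rule has no way to handle.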

This clearly is not a viable option for managing resource consumption, given how Ethereum’s execution model works and the generalised computation that transactions may invoke. We need a more nuanced solution that more closely meters the computational burden imposed by transaction execution.

Practical Solution: Measurement System of Transaction Execution Work

By design, Ethereum is a programmable blockchain. It supports smart contracts, which enable participants to deploy executable programs on the blockchain. As with many computer programs, these contracts typically expose callable functions, which transactions can invoke with or without external arguments depending on the function definition. This functionality demonstrates the wide variety of execution that can be performed on Ethereum. Given this context, we can see that transaction execution could demand anywhere from a minimal to a theoretically infinite amount of resources, given the Turing-complete properties of the EVM.

Having a better understanding of the environment and dynamics at play, we need a more precise method that considers the complexity of transaction execution in order to produce a solution that can effectively constrain the resource consumption involved with the block processing procedure. In addition to this, we must also consider how the modification of state caused by transactions impacts future costs associated with transaction execution.

Ethereum therefore introduced the gas model to help address these challenges.

The gas model is a solution designed to constrain resource consumption during the block processing procedure. It introduces a unit of measure called "gas" which assigns relative computational costs to every operation in the EVM. It is with this same measure that the maximum limit of a block is expressed.

Gas is used to measure the computational effort involved in executing transactions and updating the blockchain. Ethereum uses this unit of measure to assign costs to the primitive operations of the EVM, enabling nodes to meter the computational effort of executing a transaction. Because the EVM is a stack-based machine that executes a sequence of discrete operations, metering is fairly straightforward: it requires summing the costs of the operations in the transaction’s execution trace. Each transaction also specifies a maximum amount of gas it may consume, and execution that runs out of gas is halted, so even a transaction stuck in an infinite loop is guaranteed to stop. Ethereum then sets a maximum amount of computational effort (expressed as a maximum quantity of gas) that a block is allowed to use; if executing the transactions in a block exceeds this amount, the block is deemed invalid. In addition, the gas measurement system assigns costs to overhead tasks that consume resources outside of the EVM execution environment.
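The core idea can be sketched with a toy gas-metered stack machine. The opcode names and costs in `GAS_COST` are hypothetical and deliberately tiny, not Ethereum’s real gas schedule, and `run` and `block_gas_used` are illustrative helpers rather than any client’s API. The sketch charges gas before each operation, halts execution once a transaction’s own gas limit would be exceeded, charges an out-of-gas transaction its full gas limit, and, in simplified form, refuses any transaction that reserves more gas than the block has left.

```python
# Hypothetical gas schedule for a toy stack machine (NOT Ethereum's real costs).
GAS_COST = {"PUSH": 3, "ADD": 3, "POP": 2, "JUMP": 8, "STOP": 0}


class OutOfGas(Exception):
    """Raised when the next operation would exceed the transaction's gas limit."""


def run(program: list[tuple], gas_limit: int) -> int:
    """Execute `program`, charging gas before each operation; return gas used."""
    stack: list[int] = []
    pc = 0          # program counter
    gas_used = 0
    while pc < len(program):
        op, *args = program[pc]
        cost = GAS_COST[op]
        if gas_used + cost > gas_limit:
            raise OutOfGas(f"needed {gas_used + cost}, limit {gas_limit}")
        gas_used += cost
        if op == "PUSH":
            stack.append(args[0])
        elif op == "ADD":
            stack.append(stack.pop() + stack.pop())
        elif op == "POP":
            stack.pop()
        elif op == "JUMP":
            pc = args[0]    # unconditional jump: enough to build an infinite loop
            continue
        elif op == "STOP":
            break
        pc += 1
    return gas_used


def block_gas_used(txs: list[tuple[list[tuple], int]], block_gas_limit: int) -> int:
    """Sum the gas used by (program, tx_gas_limit) pairs under a block gas limit."""
    total = 0
    for program, tx_gas_limit in txs:
        # A transaction may not reserve more gas than the block has left.
        if tx_gas_limit > block_gas_limit - total:
            raise ValueError("invalid block: transaction exceeds remaining block gas")
        try:
            total += run(program, tx_gas_limit)
        except OutOfGas:
            total += tx_gas_limit   # out-of-gas transactions are charged their full limit
    return total


if __name__ == "__main__":
    add_once = [("PUSH", 1), ("PUSH", 2), ("ADD",), ("POP",), ("STOP",)]
    loop_forever = [("PUSH", 1), ("POP",), ("JUMP", 0)]
    print(run(add_once, gas_limit=50))                                  # 11
    print(block_gas_used([(add_once, 50), (loop_forever, 100)], 200))   # 111
```

The looping program never reaches `STOP`, yet it is cut off after a bounded amount of work because every iteration spends gas. This is how metering sidesteps the halting problem: the node never needs to predict termination, only to count.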

The diagram below illustrates the intuition around the use of the gas model to constrain and meter the resource consumption caused by transaction execution:

While the gas model does not explicitly constrain every aspect of the block processing procedure, it bounds the main source of variable cost, which we identified as transaction processing-related tasks. We can therefore think of the finite amount of gas allowed in a block as achieving the same effect as constraining the heterogeneous computational resources of a node. The introduction of this new measurement system has thus helped us tangibly achieve our desired goal.

In summary, the gas model can be considered Ethereum’s solution to bounding the resource consumption imposed by the block processing procedure. The introduction of this abstract unit of measure opens the design space for capturing and optimising the resource consumption imposed by running Ethereum.

We are not yet familiar with exactly how the gas model is implemented, nor with the challenges faced in implementing such a system, but we will soon find out how the resource pricing component of the resource allocation system deals with this.

Reflections Leading into Part 2

Blockchains are a really esoteric business, and understanding their internals dials this up even further. With this article series I am attempting to express the higher-level goals of Ethereum to keep in mind while dissecting the core technical systems that help realise these goals.

Understanding the nature of these resources, and the approaches taken to capture the costs imposed on Ethereum to ensure its operation and delivery of service, is also crucial for understanding the current approaches and future solutions around the design of the resource pricing and transaction fee mechanisms that depend on them.

As explained earlier, this information aids directly in the design of the resource pricing and transaction fee mechanisms that aim to achieve the efficiency and sustainability goals of Ethereum as a decentralised economic system.

In the upcoming Part 2 of this article series, we will explore how the resource pricing component of the resource allocation system uses the gas model to assign costs to transaction execution steps, and show how execution is metered. We will also examine the factors that influence the design of gas as a system of measure.

With the foundations of our computational resource deep-dive along with our upcoming exploration of the resource pricing component, we will therefore have a strong understanding of the entire block processing procedure by the end of Part 2. Thereafter, Part 3 will show how transaction fee mechanisms deal with the principal-agent problem at hand and drive the creation of welfare-maximising block payloads which get executed in the block processing procedure.

So with all that out of the way, let’s get started on Part 2: Resource Pricing (soon™️) 🫡.